This blog post covers my experience building an Obsidian plugin using ChatGPT – including a minimal backend. The project is open source and available on GitHub:

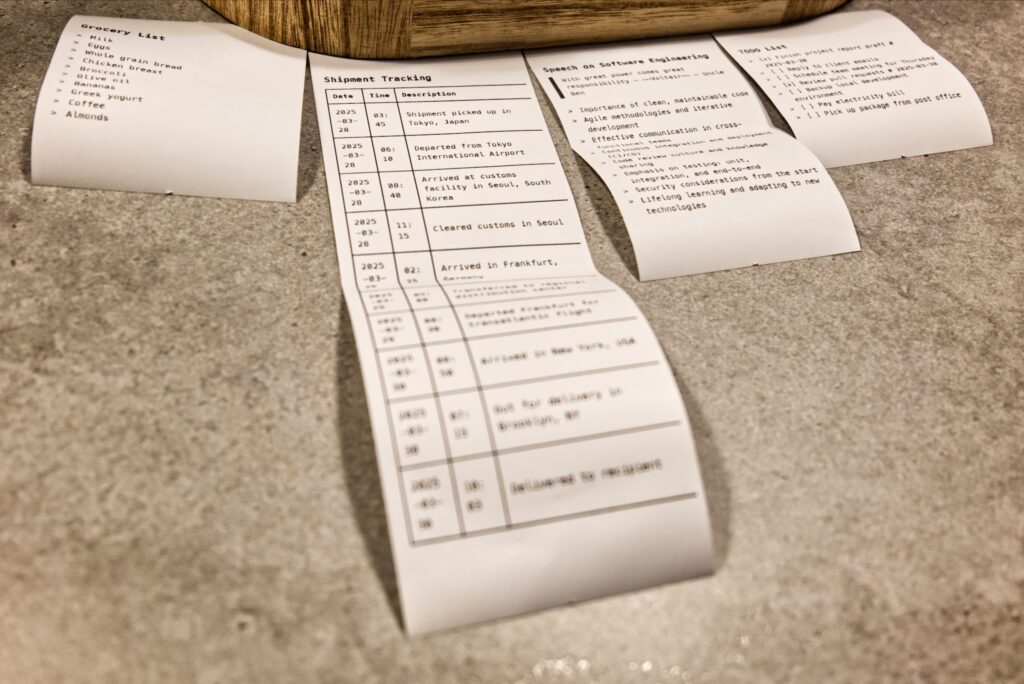

Why? To solve a real limitation I’ve hit repeatedly: Obsidian on iOS doesn’t support printing or PDF export. That’s an issue if you want to print short notes, e.g. prep lists for talks, interview questions, or quick todos. To make it even more useful (to me), I don’t print to A4 – I use a thermal receipt printer connected to a Raspberry Pi 4B. It prints on 80 mm continuous paper, ideal for portable, foldable notes that fit in a jacket pocket. Of course, a nice addition would be to support A4, too 🤓.

The resulting notes look like this:

Obsidian has no built-in way to trigger such a print workflow from iOS. So I built a minimal HTTP-based plugin that sends the current note (as Markdown) to a backend, which converts it to HTML, then to PDF, and prints it. On the server, I use Kotlin with Spring Boot for low boilerplate. Obsidian sends the note via HTTP(S) POST, along with a bearer token for simple authentication. The backend is protected either via VPN (WireGuard in my case) or TLS, and checks the token with a servlet filter.

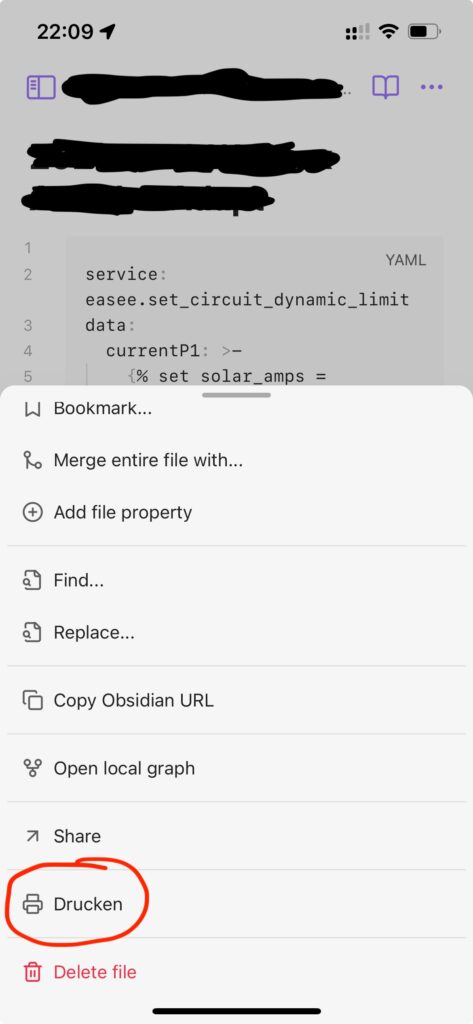
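
To make the protocol concrete, here is a minimal sketch of what such a request could look like on the plugin side. It is not the plugin's actual code: the function names, the `text/markdown` content type, and the example URL are assumptions for illustration. It uses the standard `fetch` API; inside Obsidian, the `requestUrl` helper from the `obsidian` module accepts a similarly shaped object and may be the better choice on mobile.

```javascript
// Hypothetical helper: builds the request a plugin could send to the print backend.
// `endpoint` and `token` stand in for the values configured in the plugin settings.
function buildPrintRequest(endpoint, token, markdown) {
  if (!endpoint) throw new Error("No endpoint configured");
  if (!token) throw new Error("No token configured");
  return {
    url: endpoint,
    method: "POST",
    headers: {
      // Bearer scheme: the backend compares this token against its own secret.
      Authorization: `Bearer ${token}`,
      // Assumption: the note is sent as raw Markdown text.
      "Content-Type": "text/markdown; charset=utf-8",
    },
    body: markdown,
  };
}

// Sends the note and fails loudly on any non-2xx response.
// `fetchImpl` is injectable so the function can be exercised without a network.
async function sendNote(endpoint, token, markdown, fetchImpl = fetch) {
  const { url, ...init } = buildPrintRequest(endpoint, token, markdown);
  const response = await fetchImpl(url, init);
  if (!response.ok) {
    throw new Error(`Print request failed with HTTP ${response.status}`);
  }
  return response.status;
}
```

A call could then look like `await sendNote("https://printer.example/print", token, noteText)`, where the URL is a placeholder. Keeping the request construction separate from the sending makes the header logic easy to check in isolation.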

The Obsidian plugin itself is small and works cross-platform. You can configure title, icon, endpoint, and token. Once active, it adds an action to the file menu (right-click on the file tab or the three-dot menu on iOS, respectively):

When triggered, it sends the note to the backend. Please ignore the fact that the menu item is labeled in German even though the application is set to English – I haven’t localized the plugin yet.
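
For readers who have not written an Obsidian plugin before, the following sketch shows roughly how a file-menu action can be registered. The settings object, its default values, and the helper name `addPrintMenuItem` are assumptions for illustration, not taken from the actual plugin. The helper only relies on the shape of Obsidian's menu API (`addItem`, `setTitle`, `setIcon`, `onClick`), so it can be tried outside Obsidian with a stand-in menu object.

```javascript
// Hypothetical settings object mirroring the four configurable values
// (title, icon, endpoint, token). The defaults are made up.
const DEFAULT_SETTINGS = {
  title: "Print note",
  icon: "printer",
  endpoint: "",
  token: "",
};

// Adds one entry to a menu. `menu` is expected to offer the interface of
// Obsidian's Menu: addItem() takes a callback that receives an item with
// chainable setTitle(), setIcon(), and onClick() methods.
function addPrintMenuItem(menu, file, settings, onPrint) {
  menu.addItem((item) => {
    item
      .setTitle(settings.title)
      .setIcon(settings.icon)
      .onClick(() => onPrint(file));
  });
}

// Inside a plugin's onload(), this could be wired up roughly like so:
//
//   this.registerEvent(
//     this.app.workspace.on("file-menu", (menu, file) => {
//       addPrintMenuItem(menu, file, this.settings, async (f) => {
//         const markdown = await this.app.vault.read(f);
//         // ...send `markdown` to the configured endpoint...
//       });
//     })
//   );
```

The wiring is left as a comment because it needs the Obsidian runtime; the `file-menu` workspace event fires for both the desktop right-click menu and the three-dot menu.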

Plugin Development

Plugin development in Obsidian is JavaScript-based. For testing, you can drop the files directly into a vault subdirectory – Obsidian picks them up as a community plugin. If you sync your vault via iCloud or OneDrive, the plugin becomes available on iOS after sync. My plugin is simple enough to work on all platforms.

I asked ChatGPT to generate a basic plugin project. It produced a clean structure but split logic and settings across multiple JS files. Obsidian failed to load the plugin. The Electron dev console pointed to the issue: the import of settings.js in main.js broke. ChatGPT proposed one fix after another and would have looped endlessly if left unchecked. It has a Labrador-like urge to please, as noted in another post. Only after I explicitly asked whether multi-file plugins might be unsupported did it suggest bundling the plugin. I chose esbuild as the bundler – minimal, fast, and well-suited for the job. Once the model understood the bundling requirement, integrating esbuild was straightforward. Problem solved.
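
As a rough idea of what the bundling step involves, here is a sketch of esbuild options that merge several source files into the single main.js that Obsidian loads. The `src/` layout, the `es2018` target, and the function name are assumptions, not the project's actual build script; the option names themselves are regular esbuild build options.

```javascript
// Hypothetical layout: sources live in src/ (src/main.js imports ./settings.js),
// and the bundled result is written to main.js in the plugin folder.
function makeBuildOptions(production) {
  return {
    entryPoints: ["src/main.js"],
    bundle: true,            // inline settings.js and any other local imports
    external: ["obsidian"],  // supplied by Obsidian at runtime, so not bundled
    format: "cjs",           // Obsidian loads plugins as CommonJS modules
    target: "es2018",
    outfile: "main.js",
    minify: production,
    sourcemap: production ? false : "inline",
  };
}

// In a build script (assumes esbuild is installed as a dev dependency):
//
//   require("esbuild").build(makeBuildOptions(true)).catch(() => process.exit(1));
```

Marking `obsidian` as external matters: the module only exists inside the app, so the bundler must leave that import untouched.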

Backend Development

The backend was easier. I reused the setup from an earlier project: Spring Boot with Kotlin and matching dependency versions. ChatGPT handled this part well. The challenge came with converting Markdown to PDF. I had to guide the model to the right libraries and CSS options. The main issue: Java libraries with overloaded methods. ChatGPT struggled with these, especially when the methods are called from Kotlin. It couldn’t consistently pick the right method signature.

Overloaded methods – e.g. accepting a File, InputStream, or String – explode the number of valid combinations. LLMs quickly get stuck trying random permutations. Eventually, I found the correct method signature in the docs, pasted it into the prompt, and it worked instantly. A clear reminder: GPT needs strong supervision in real-world JVM use cases.

What’s Next?

I’ll cover strategies for efficient LLM-assisted coding in a separate post. In short: yes, you can absolutely build useful software with LLMs, and it can be testable and maintainable. (Don’t get me wrong: the project in this post still needs automated tests and proper docs 😇.) But don’t fall for “vibe coding.” LLMs still need someone who knows what’s going on. Without supervision, this approach breaks – fast.

If unsupervised vibe coding becomes the norm, we’re all in trouble.

Exciting 🤓.

This article has been written with help from an LLM, which may make errors (like humans do 😇).